
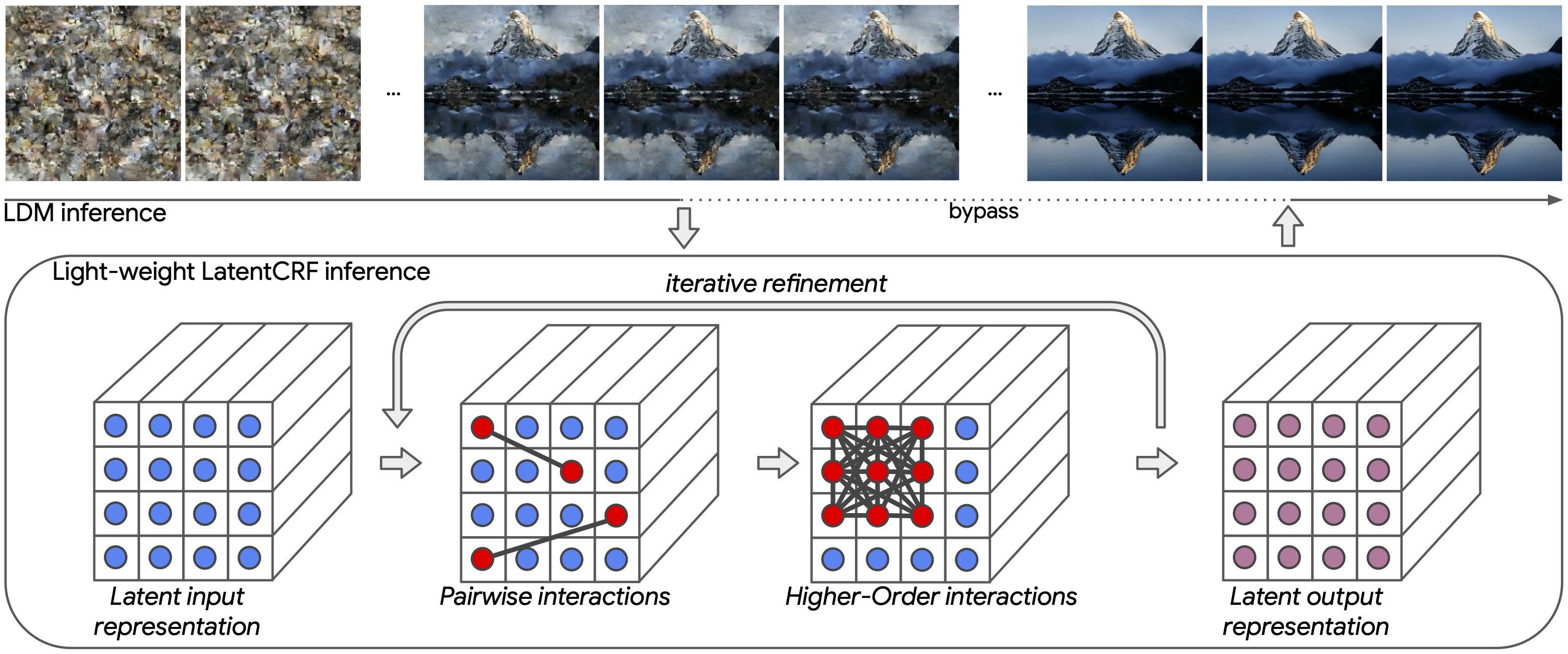

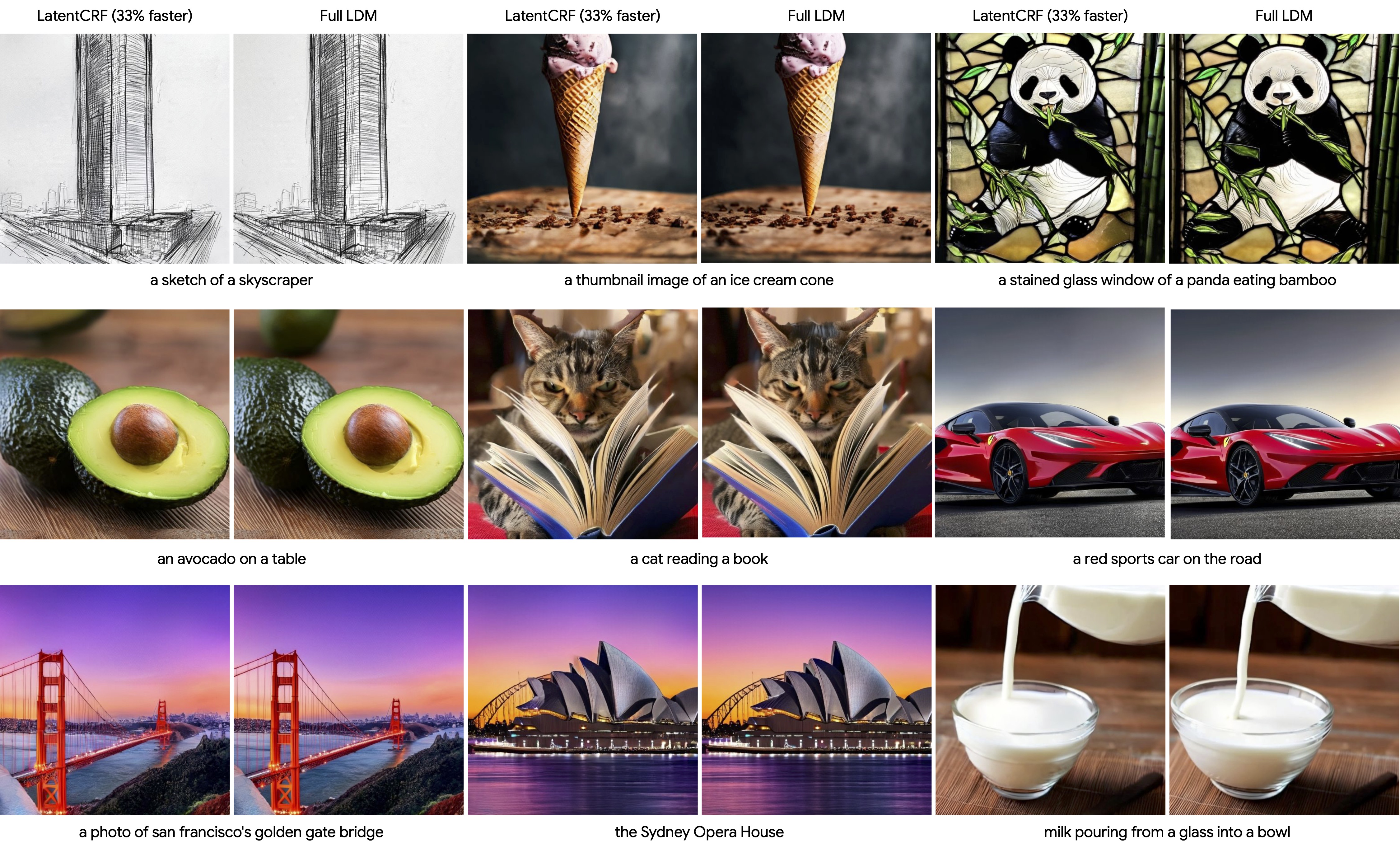

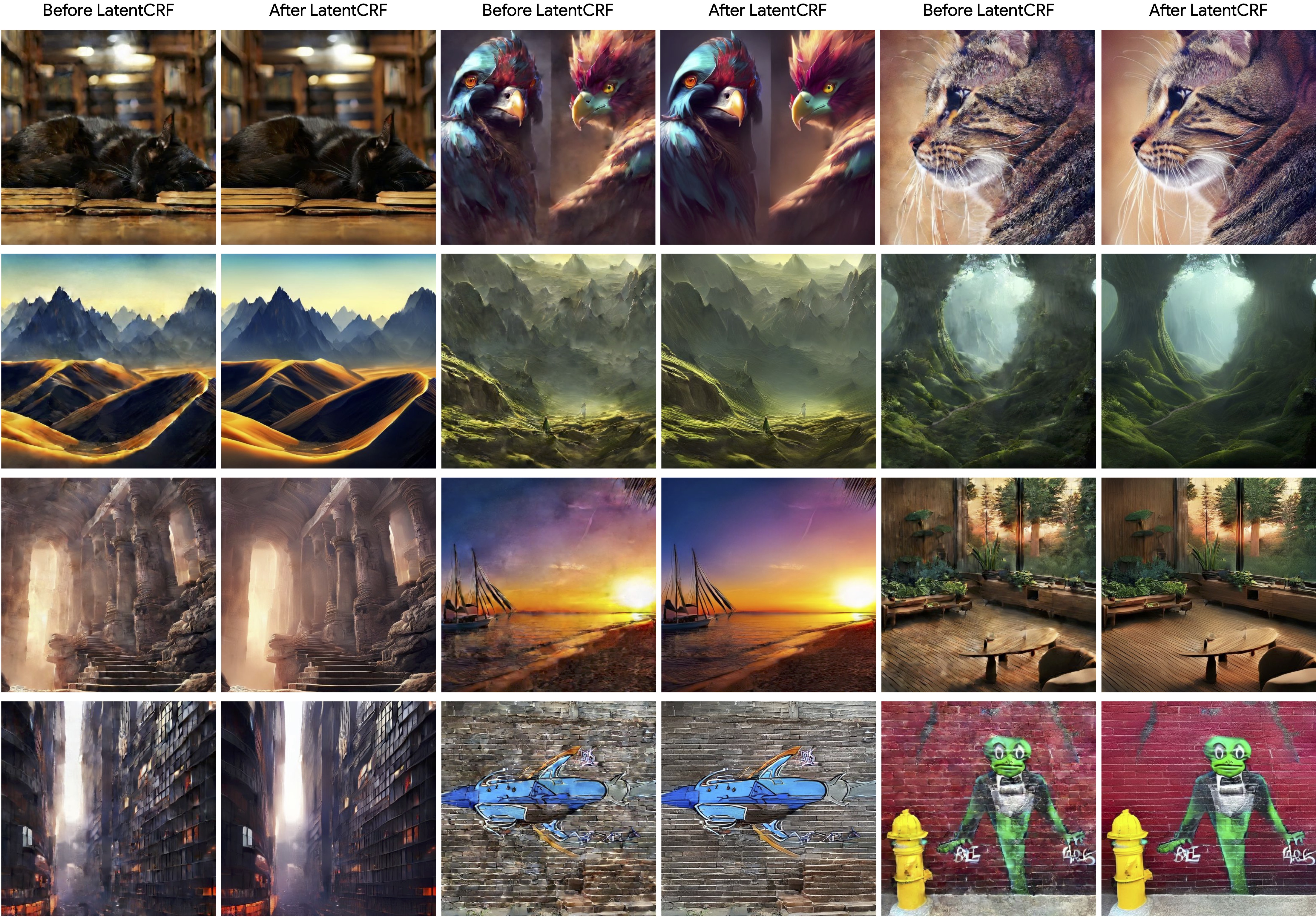

Latent Diffusion Models (LDMs) produce high-quality, photo-realistic images; however, the latency
incurred by multiple costly inference iterations can restrict their applicability. We introduce
LatentCRF, a continuous Conditional Random Field (CRF) model, implemented as a neural network layer,
that models the spatial and semantic relationships among the latent vectors in the LDM. By replacing
some of the computationally intensive LDM inference iterations with our lightweight LatentCRF, we
achieve a superior balance between quality, speed, and diversity. We increase inference efficiency by
33% with no loss in image quality or diversity compared to the full LDM. LatentCRF is an easy
add-on that does not require modifying the LDM.
